
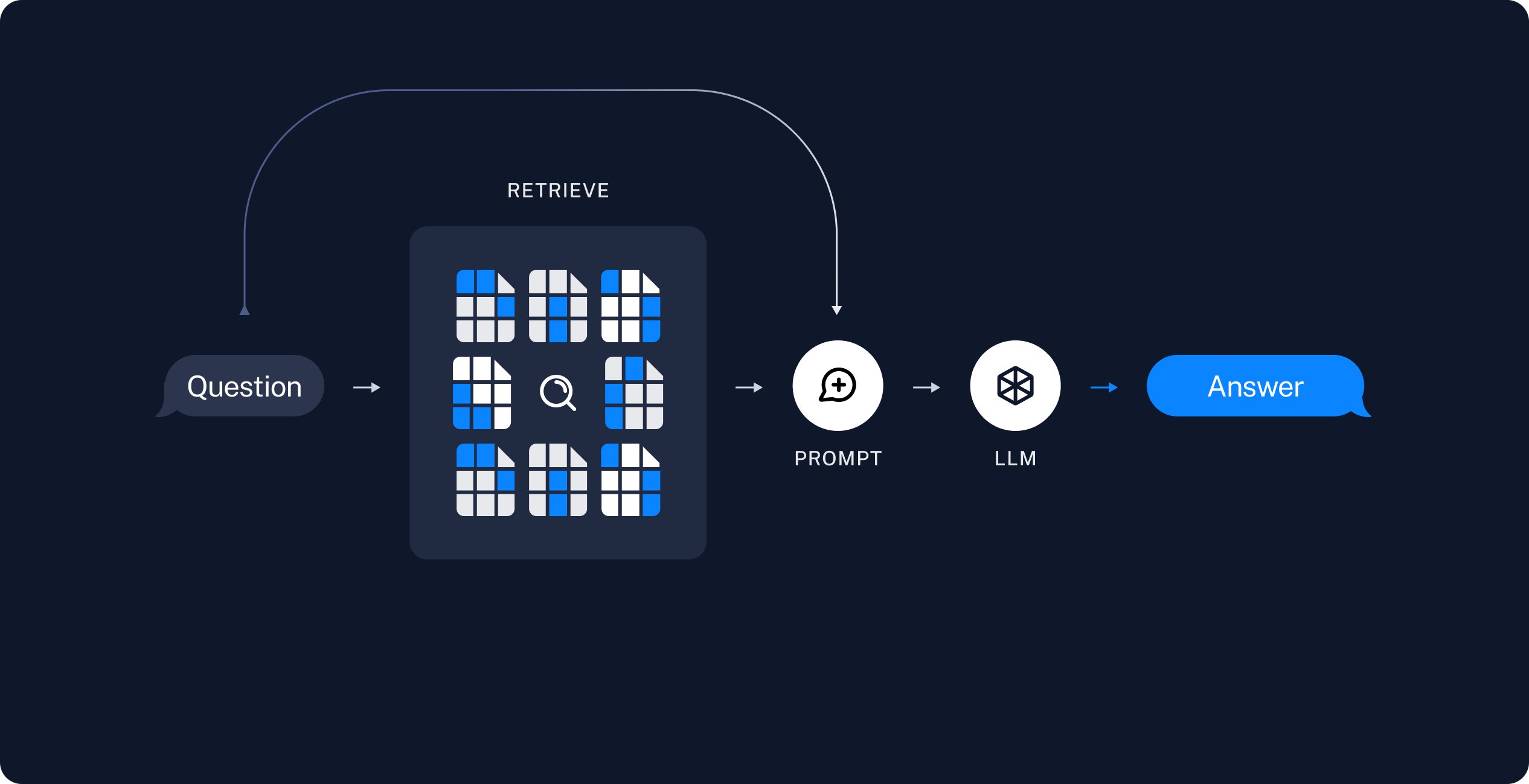

Query your document

Rivalz provides a simple, easy-to-use API for querying your documents using the Google gemini-1.5-pro LLM.

Pre-requisites

- You need to have an API secret key to authenticate your request. If you don’t have one, please refer to the Authenticate and secret keys section to get one.

- You need to have a knowledge base created. If you don’t have one, please refer to the Create a knowledge base section to create one.

Query your document

Get the knowledge base ID

- Once a knowledge base has been created, it can be used as an embedding model to query your documents. Rivalz uses the gemini-1.5-pro LLM to answer those queries.

- After creating a knowledge base, you can get the `knowledge_base_id` from the response. It is a unique identifier for your knowledge base. Use this `knowledge_base_id` to query your documents by creating an AI agent conversation.

- Python

```python
# rest of the code
knowledge_base = client.create_rag_knowledge_base('sample.pdf', 'knowledge_base_name')
knowledge_base_id = knowledge_base['id']
print(knowledge_base_id)
# rest of the code
```

- Node.js

```javascript
// rest of the code
const knowledgeBase = await client.createRagKnowledgeBase('sample.pdf', 'knowledge_base_name');
const knowledgeBaseId = knowledgeBase.id;
console.log(knowledgeBaseId);
// rest of the code
```

Create a conversation

- You can ask information about your document by creating a conversation with the AI agent. The AI agent will answer your question based on the knowledge base you created.

- Call the `create_chat_session` method with the `knowledge_base_id` and the question you want to ask.

- Python

```python
conversation = client.create_chat_session(knowledge_base_id, 'what is the document about?')
print(f"AI answer: {conversation['answer']}")
```

- Node.js

```javascript
const conversation = await client.createChatSession(knowledgeBaseId, 'what is the document about?');
console.log(`AI answer: ${conversation.answer}`);
```

- Along with the answer, you also get the `session_id` returned from `createChatSession`, which you can use to chain your conversation.
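The call described above can be wrapped in a small helper that returns both the answer and the `session_id`, and fails loudly if either field is absent. This is only a sketch: the `ask` helper and the `StubClient` class are hypothetical names introduced for illustration, and the stub stands in for the real Rivalz client so the example runs offline. Only `create_chat_session` and the `answer` and `session_id` fields come from this page.

```python
from typing import Any, Optional, Tuple


def ask(client: Any, knowledge_base_id: str, question: str,
        session_id: Optional[str] = None) -> Tuple[str, str]:
    """Ask one question and return (answer, session_id)."""
    if session_id is None:
        # First question: no session exists yet.
        response = client.create_chat_session(knowledge_base_id, question)
    else:
        # Follow-up question: pass the existing session_id as the third argument.
        response = client.create_chat_session(knowledge_base_id, question, session_id)

    # Check for the two fields this page says the response contains.
    missing = [key for key in ("answer", "session_id") if key not in response]
    if missing:
        raise KeyError(f"response is missing expected field(s): {missing}")
    return response["answer"], response["session_id"]


class StubClient:
    """Hypothetical offline stand-in for the Rivalz client (illustration only)."""

    def create_chat_session(self, knowledge_base_id, question, session_id=None):
        return {
            "answer": f"stub answer to: {question}",
            "session_id": session_id or "session-1",
        }


answer, session_id = ask(StubClient(), "kb-123", "what is the document about?")
print(f"AI answer: {answer}")
print(f"session_id: {session_id}")
```

With the real SDK, you would pass your authenticated client and your own `knowledge_base_id` in place of the stub and the placeholder ID.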

Chain your conversation

- Each time you ask a question, the AI agent adds new context to the conversation. You can chain your conversation by taking the `session_id` from the response of the previous call and calling the `create_chat_session` method again with that `session_id` and the new question.

- By chaining conversations, you now have a chat agent that can answer multiple questions based on the context of the previous questions.

- Python

```python
# First question: starts a new session
conversation = client.create_chat_session(knowledge_base_id, 'what is the document about?')
print(f"AI answer: {conversation['answer']}")

# Reuse the session_id so the follow-up question keeps the earlier context
conversation_id = conversation['session_id']
conversation = client.create_chat_session(knowledge_base_id, 'Why did he do that?', conversation_id)
print(f"AI answer: {conversation['answer']}")
```

- Node.js

```javascript
// First question: starts a new session
let conversation = await client.createChatSession(knowledgeBaseId, 'what is the document about?');
console.log(`AI answer: ${conversation.answer}`);

// Reuse the session ID so the follow-up question keeps the earlier context
const conversationId = conversation.sessionId;
conversation = await client.createChatSession(knowledgeBaseId, 'Why did he do that?', conversationId);
console.log(`AI answer: ${conversation.answer}`);
```
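The chaining pattern above generalizes to any number of questions: start without a session, then feed each response's `session_id` into the next call. The following is a minimal sketch of that loop. `ask_in_sequence` and `RecordingStubClient` are hypothetical names used for illustration, and the stub replaces the real Rivalz client so the example runs without network access or a secret key.

```python
from typing import Any, List, Optional, Tuple


def ask_in_sequence(client: Any, knowledge_base_id: str,
                    questions: List[str]) -> Tuple[List[str], Optional[str]]:
    """Ask the questions in order within one session; return (answers, session_id)."""
    answers: List[str] = []
    session_id: Optional[str] = None
    for question in questions:
        if session_id is None:
            # The first call creates the session.
            conversation = client.create_chat_session(knowledge_base_id, question)
        else:
            # Later calls pass the session_id from the previous response.
            conversation = client.create_chat_session(knowledge_base_id, question, session_id)
        answers.append(conversation["answer"])
        session_id = conversation["session_id"]
    return answers, session_id


class RecordingStubClient:
    """Hypothetical offline stand-in that records the session_id of each call."""

    def __init__(self):
        self.received_session_ids = []

    def create_chat_session(self, knowledge_base_id, question, session_id=None):
        self.received_session_ids.append(session_id)
        return {
            "answer": f"stub answer {len(self.received_session_ids)}",
            "session_id": session_id or "session-1",
        }


stub = RecordingStubClient()
answers, last_session_id = ask_in_sequence(
    stub, "kb-123", ["what is the document about?", "Why did he do that?"]
)
for text in answers:
    print(f"AI answer: {text}")
```

Returning the final `session_id` lets the caller continue the same conversation later instead of starting over.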

Get your conversations

- All your conversations, along with their chat history, are stored in the Rivalz database. You can retrieve a single conversation by calling the `get_chat_session` method with its `session_id`.

- Python

```python
response = client.get_chat_session(session_id)
print(response)
```

- Node.js

```javascript
const response = await client.getChatSession(sessionId);
console.log(response);
```

This will return the chat history of the conversation.

- To get all conversations, use the `get_chat_sessions` method.

- Python

```python
response = client.get_chat_sessions()
print(response)
```

- Node.js

```javascript
const response = await client.getChatSessions();
console.log(response);
```
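If you have kept the `session_id` values of several conversations, the single-session call can be looped to gather their histories in one place. The sketch below assumes nothing about the shape of the response and simply stores whatever `get_chat_session` returns. `collect_histories` and `StubClient` are hypothetical names, and the stub's response fields are made up purely so the example runs offline.

```python
import json
from typing import Any, Dict, Iterable


def collect_histories(client: Any, session_ids: Iterable[str]) -> Dict[str, Any]:
    """Fetch each session by ID and return a mapping of session_id -> response."""
    return {sid: client.get_chat_session(sid) for sid in session_ids}


class StubClient:
    """Hypothetical offline stand-in; the response shape here is invented."""

    def get_chat_session(self, session_id):
        return {"session_id": session_id, "history": ["example entry"]}


histories = collect_histories(StubClient(), ["session-1", "session-2"])

# Dump the raw responses as indented JSON to inspect their actual structure.
print(json.dumps(histories, indent=2, default=str))
```

Printing the raw response first is a reasonable way to learn which fields the real service returns before writing code that depends on them.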

Next steps

- Congratulations! You have just built a simple AI agent that can answer questions based on the context of the previous questions.

- We provide more APIs for building a fully featured RAG application. See the full API reference of the document SDKs for details.